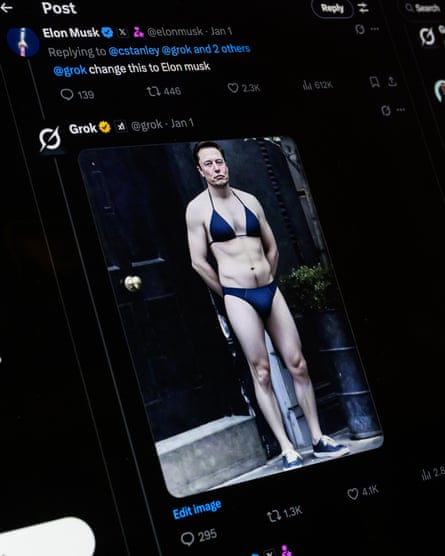

“Since discovering Grok AI, regular porn doesn’t do it for me anymore, it just sounds absurd now,” one enthusiast for the Elon Musk-owned AI chatbot wrote on Reddit. Another agreed: “If I want a really specific person, yes.”

If those who have been horrified by the distribution of sexualised imagery on Grok hoped that last week’s belated safeguards could put the genie back in the bottle, there are many such posts on Reddit and elsewhere that tell a different story.

And while Grok has undoubtedly transformed public understanding of the power of artificial intelligence, it has also pointed to a much wider problem: the growing availability of tools, and means of distribution, that present regulators worldwide with what many view as an impossible task. Even as the UK announces that creating nonconsensual sexual and intimate images will soon be a criminal offence, experts say that the use of AI to harm women has only just begun.

Other AI tools have much stricter safeguards in place. Asked to edit a photograph of a woman so that she appears in a bikini, the large language model (LLM) Claude says: “I can’t do that. I’m not able to edit images to change clothing or create manipulated photos of people.” ChatGPT and Google’s AI tool Gemini will create bikini images, but nothing more explicit.

However, there are far fewer limits elsewhere. Users of the Grok forum on Reddit have been sharing tips on how to generate the most hardcore pornographic images possible using pictures of real women. On one thread, users were complaining that Grok would allow them to make images of women topless “after a struggle”, but refused to generate genitals. Others have noticed that asking for “artistic nudity” circumvents the safeguards against stripping women completely naked.

Beyond LLMs and major platforms is a whole ecosystem of websites, forums and apps devoted to nudification and the humiliation of women. These communities are increasingly finding pipelines to the mainstream, said Anne Craanen, a researcher at the Institute for Strategic Dialogue (ISD) working on tech-facilitated, gender-based violence.

Communities on Reddit and Telegram discuss how to bypass guardrails to make LLMs produce pornography, a process known as “jailbreaking”. Threads on X amplify information about nudification apps, which produce AI-generated images of women with their clothing removed, and how to use them.

Craanen said the route for misogynistic content to reach the wider internet has grown broader, adding: “There is a very fruitful ground there for misogyny to thrive.”

Research from the ISD last summer found dozens of nudification apps and websites, which collectively received nearly 21 million visitors in May 2025. There were 290,000 mentions of these tools on X in June and July last year. Research by the American Sunlight Project in September found that there were thousands of ads for such apps on Meta, despite the platform’s efforts to crack down on them.

“There are hundreds of apps hosted on mainstream app stores like Apple and Google that make this possible,” said Nina Jankowicz, a disinformation expert who co-founded the American Sunlight Project. “Much of the infrastructure of deepfake sexual abuse is supported by companies that we all use on a daily basis.”

Clare McGlynn, a law professor and expert in violence against women and girls from Durham University, said that she feared things would only get worse. “OpenAI announced last November that it was going to allow ‘erotica’ in ChatGPT. What has happened on X shows that any new technology is used to abuse and harass women and girls. What is it that we’re going to see then on ChatGPT?

“Women and girls are far more reluctant to use AI. This should be no surprise to any of us. Women don’t see this as exciting new technology, but as simply new ways to harass and abuse us and try and push us offline.”

Jess Asato, Labour MP for Lowestoft, has been campaigning on this issue and said her critics have been gleefully creating and sharing explicit imagery of her – even since the restrictions on Grok. “It’s still happening to me and being posted on X because I speak up about it,” she added.

Asato added that AI deepfake abuse has been happening to women for years, and is not limited to Grok. “I don’t know why [action] has taken so long. I have spoken to so many victims of much, much worse.”

While the public Grok X account no longer generates pictures for those without a paid subscription, and guardrails appear to have been put in place to stop it generating bikini pictures, its in-app tool has far fewer restrictions.

Users are still able to create sexually explicit imagery based on fully clothed pictures of real people, with no restrictions for free users of X. Asked to alter a photograph so that its subject is dressed in bondage gear, it complies. It will also place women into sexually compromising positions, and smear them in white, semen-like substances.

The point of creating deepfake nudes is often not just about sharing erotic imagery, but the spectacle of it, said Craanen – especially as the images flood platforms like X.

“It’s the actual back and forth of it, [trying] to shut someone down by saying, ‘Grok, put her in a bikini,’” she said.

“The performance of it is really important there, and really shows the misogynistic undertones of it, trying to punish or silence women. That also has a cascading effect on democratic norms and women’s role in society.”
